Did you know Texas is inherently more trustworthy than California?

It’s not, but that’s certainly the perception Meta CEO Mark Zuckerberg would like us to have. He’s even pretty clear that he doesn’t believe it himself; he just cares about perception.

Today, the world’s biggest social media company announced a stunning reversal in its approach toward speech and moderation in the aftermath of Donald Trump’s re-election to the presidency.

Content Moderation

First, Meta will cease its fact-checking initiatives across Facebook, Instagram and Threads. They will be replaced by Community Notes, a concept popularized by Twitter (now X) that lets users work together to identify inaccurate information and write explanatory labels on individual posts.

Frankly, this is pretty worrisome. Community Notes are good in theory, but I don’t know that we should trust the community writing the notes. I’m not going to defend Meta’s fact-checking system, as it has often been wrong and has shown inappropriate bias at times. Not typically in the ways oligarchs want us to believe, but it happens. I’ve had my own weird run-ins with content moderation issues and slow responses from Meta’s Trust and Safety team in my work, both as a social media manager in the news industry from 2014 to 2020 and as a digital strategist in higher education since then.

A study conducted in 2024 found that Community Notes on X were not displayed on as many as 74% of misleading posts about the U.S. presidential election, even in instances where corrective notes were available. That’s an atrocious record, and one we can expect to see repeated on Meta’s platforms when control is ceded to the platforms’ power users instead of trained professionals.

Even in situations where community moderators are active and trying to do good, the anonymous nature of these systems will invite confusion and manipulation by bad actors. If you’ve been on Facebook at all in the past 10 years, you know just how bad its moderation system is. I can’t imagine that handing it over to the people of Facebook will make it any better.

News and Political Content

“People don’t want political content to take over their News Feed.” – Mark Zuckerberg, February 2021.

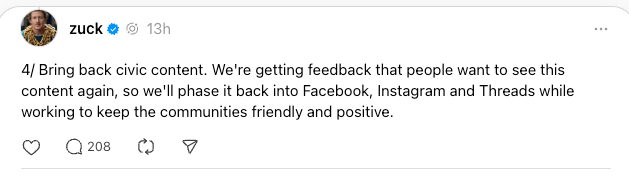

January 2025:

The man is an opportunist and little else. It’s clear that he is not leading Meta with a vision for the future, but using it as a propaganda tool to gain favor with the U.S. government’s ruling party, no matter who that is. In 2021, the Biden administration influenced Zuckerberg to pull back political content after years of harassment and misinformation under the first Trump administration, and in 2025 a new Trump administration influenced him to go right back to where we started: allowing hate speech and misinformation to run unchecked by his company.

There are many reasons why this is bad, but let me give you one terrifying real-world example:

Facebook played a significant role in amplifying hate speech and violent rhetoric that contributed to a genocide in Myanmar in 2017. Amnesty International detailed the company’s role in those atrocities in a report released in 2022.

“Meta’s algorithms proactively amplified and promoted content which incited violence, hatred, and discrimination against the Rohingya – pouring fuel on the fire of long-standing discrimination and substantially increasing the risk of an outbreak of mass violence. The report concludes that Meta substantially contributed to adverse human rights impacts suffered by the Rohingya and has a responsibility to provide survivors with an effective remedy.” – Amnesty International

This is what happens when moderators step aside and let the platforms work themselves out. “Free speech” is a guise meant to appeal to Americans’ most valued principle: freedom.

A Dangerous Step Backward

Meta’s decision to ditch professional fact-checking for a crowd-sourced approach isn’t just a simple policy change; it’s a dangerous step backward. We’ve seen what happens when platforms prioritize engagement over accountability: misinformation spreads faster, hate speech runs rampant, and in the worst cases, real-world harm follows. The tragedy in Myanmar should have been a wake-up call, not an instruction manual.

Let’s be clear: this isn’t actually about free speech or empowering users. I believe it is about Meta protecting its bottom line while deflecting responsibility for the harm it causes. Mark Zuckerberg isn’t leading with a vision for the future; he’s just playing politics, switching sides depending on who’s in power. And while he profits, the rest of us are left dealing with the consequences of platforms that amplify lies and division.

We’ve seen this story before, and we know how it ends.
